Contents:

1. Purpose

2. Materials used

3. Creation of the corpus

4. Corpus functionality

5. Corpus statistics

6. Browser compatibility

7. Licence

- Purpose

The Phonetic Corpus of Audiobooks (PCA) was created primarily to provide audio data for phonetic and acoustic research on speech and articulation. Many general-purpose corpora of English are available online, such as the Corpus of Contemporary American English (COCA) and the British National Corpus (BNC), but they offer written text only, even where it is based on spoken language (for the BNC, some audio materials can be downloaded through the interface at http://bncweb.lancs.ac.uk). PCA is unique in that it offers easy access to audio recordings at the sentence level. Users can search for individual words and phrases (see Section 4.1) or speech sound combinations (see Section 4.2). If the search items are found in the corpus database, the audiobook fragments containing them can be played directly in the web browser or downloaded as mp3 files, which can then be analysed in any speech analysis software. Searches can also be narrowed by author, reader and text criteria (see Section 4.3), which is useful for sociophonetic investigations.

In addition to the major purposes specified above, the corpus may be used in other types of linguistic research and domains related to natural language.

- Materials used

The audiobooks used in the corpus were downloaded from "https://librivox.org", which is a non-profit library of free audiobooks recorded by volunteers. The website was initiated in 2005 by Hugh McGuire. It offers more than 12,000 finished projects, most of which are novels in English.

The corresponding text versions of the novels were found at "https://www.gutenberg.org", which offers over 58,000 free e-books in numerous formats. Founded in 1971 by Michael S. Hart, it is the oldest digital library.

To find out which audiobooks and e-books were used in this project, as well as other background data and corpus statistics, see Section 5 below.

- Creation of the corpus

At the initial stage of the project, 104 English audiobooks were downloaded from "https://librivox.org". To obtain a sample representing different dialects and genders, only audiobooks read by groups of readers, rather than by a single person, were chosen. The corresponding text versions of the novels were then found at "https://www.gutenberg.org". Using a Python script, the texts were divided into syntactically and prosodically independent units. In many cases, these are "orthographic sentences", defined as any portion of text ending in ".", "!" or "?". In dialogues, however, such simple segmentation does not always work: the narrator commonly interrupts a character's utterance, as in the example below:

'Take off your cap, child,' said Miss Betsey, 'and let me see you.' (David Copperfield by Charles Dickens, Chapter 1)

According to the orthographic definition, the fragment constitutes one sentence, but from both the syntactic and prosodic points of view it contains two kinds of utterance: the words spoken by the character and the narrator's comment. A Python script was written to divide such sentences into smaller parts. The resulting segmentation for this example is: 1) 'Take off your cap, child', 2) said Miss Betsey, 3) 'and let me see you'.
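The splitting step can be illustrated with a minimal Python sketch. It is not the project's actual script, which is not reproduced in this document. It assumes straight single quotation marks and no apostrophes inside the quoted speech, and the name `split_dialogue_units` is hypothetical. Unlike the segmentation listed above, it leaves the punctuation attached to each unit.

```python
import re

# Matches one stretch of quoted speech delimited by straight single quotes.
# Assumption: no apostrophes (e.g. "don't") occur inside the quoted speech.
QUOTED = re.compile(r"('[^']*')")


def split_dialogue_units(sentence):
    """Split an orthographic sentence into quoted and unquoted text units."""
    # Because the pattern has a capturing group, re.split keeps the quoted
    # stretches in the result alongside the text between them.
    pieces = QUOTED.split(sentence)
    return [piece.strip() for piece in pieces if piece.strip()]


example = "'Take off your cap, child,' said Miss Betsey, 'and let me see you.'"
units = split_dialogue_units(example)
for number, unit in enumerate(units, start=1):
    print(number, unit)
```

A production script would also need to handle typographic quotation marks, apostrophes and nested quotations, which this sketch deliberately ignores.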

Next, such "sentences" (or rather "text units") were automatically aligned with the corresponding parts of the audiobooks using Aeneas, a Python/C library designed to synchronize audio and text automatically. Additionally, using various Python scripts, all the text units and the corresponding recordings were classified according to a range of criteria, such as context (narrator vs. dialogue), pragmatic function (statement/directive vs. question vs. exclamative statement), reader's gender (female vs. male), reader's dialect (North America vs. Great Britain vs. Australia/New Zealand vs. other/non-native), author's gender (female vs. male) etc.

Once the database was ready, several web development technologies were used to create an online application with an intuitive graphical user interface.

- Corpus functionality

- "Simple" queries

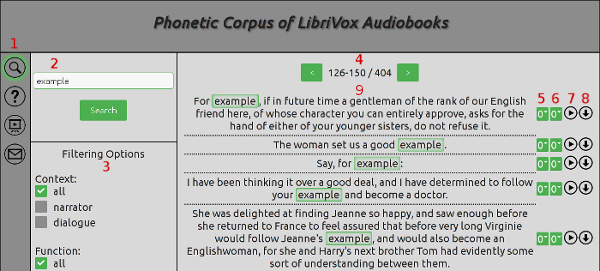

You can start using the corpus by typing a word or phrase in the search bar. An example result is presented below.

1 – the navigation bar, which includes the search icon (searching may be initiated from any other option chosen, but this icon will be activated once the search has started), the help icon, the tutorial icon (you may watch a video tutorial offering a comprehensive introduction to the functionality of the corpus) and the contact icon

2 – search bar

3 – filtering options (see Section 4.3 for details)

4 – pagination panel – "previous page" and "next page". Between these icons, you can see the numbers referring to the currently displayed examples, and after the forward slash "/" the number of all examples of the search term found in the corpus

5 – "left margin" - you may change the default value of 0 to any digit between 1 and 5. This specifies the number of seconds added to the beginning of the audio fragment

6 – "right margin" - you may change the default value of 0 to any digit between 1 and 5. This specifies the number of seconds added to the end of the audio fragment

7 – "play button" - the button opens a new window in which the recording is played. If a new window does not open, change the settings in your browser or disable your ad blocking application.

8 – "download button" - the button initiates a download of the audio file. If the download does not start automatically, change the settings in your browser or disable your ad blocking application.

9 – results section – you may click any of the text units and a new window will open with the details concerning the author, the novel, the reader, the context (narrator vs. dialogue), the function (statement/directive vs. question vs. exclamative statement) and the location of the recording in the source audio file

- Wildcards

At this moment, four wildcards are available:

? for "one character". For instance, the query "an?" returns the following 9 types: and, any, ann, ant, ane, ana, anb, anz and ans. The last three are hapaxes occurring only once in the entire corpus.

* for "one or more characters". For instance, the query "an*" returns as many as 448 types including all the ones found in the previous example and words such as another, anything or answered.

=c for "a consonant letter". The wildcard stands for any letter which typically represents consonants in articulation. These letters are b, c, d, f, g, h, j, k, l, m, n, p, q, r, s, t, w, v, x, z (although w may also be used to represent diphthongs, as in how). For example, the query "an=c" returns the following types: and, ann, ant, anb, anz and ans.

=v for "a vowel letter". The wildcard stands for any letter which typically represents vowels in articulation. These letters are a, e, o, u, i, y (y may also be used to represent the approximant /j/, as in yes). For instance, the query "an=v" returns any, ane, ana.

The "consonant" and "vowel" letters do not represent individual consonant and vowel phonemes because of the large distance between spelling and pronunciation in modern English. These wildcards may be, however, quite useful in narrowing down searches.

All four wildcards may also be freely combined. For example, the query "=c=v=v=c* ?o?" returns a large number of pairs of words. Each first word begins with a consonant letter, followed by two vowel letters, followed by another consonant letter and followed by 1 or more characters. Each second word begins with one character, followed by the letter "o", followed by another single character. The results include phrases such as could not, waiting for and heard you.
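The wildcard semantics described above can be sketched as a translation into regular expressions. This is an illustration only and says nothing about how the corpus implements its search. The function name `wildcard_to_regex`, the reading of "character" as "any non-whitespace character", and the small word list are assumptions made for the example.

```python
import re

# Letter sets as listed in the descriptions of =c and =v above.
CONSONANT_LETTERS = "bcdfghjklmnpqrstwvxz"
VOWEL_LETTERS = "aeouiy"


def wildcard_to_regex(query):
    """Translate a wildcard query into a compiled regular expression."""
    parts = []
    i = 0
    while i < len(query):
        if query.startswith("=c", i):
            parts.append(f"[{CONSONANT_LETTERS}]")
            i += 2
        elif query.startswith("=v", i):
            parts.append(f"[{VOWEL_LETTERS}]")
            i += 2
        elif query[i] == "?":
            parts.append(r"\S")   # assumption: any single non-space character
            i += 1
        elif query[i] == "*":
            parts.append(r"\S+")  # one or more non-space characters
            i += 1
        elif query[i].isspace():
            parts.append(r"\s+")  # word boundary inside a phrase query
            i += 1
        else:
            parts.append(re.escape(query[i]))
            i += 1
    return re.compile("".join(parts), re.IGNORECASE)


def search(query, candidates):
    """Return the candidates that match the whole query."""
    pattern = wildcard_to_regex(query)
    return [item for item in candidates if pattern.fullmatch(item)]


# A tiny illustrative word list, not the corpus vocabulary.
words = ["an", "and", "any", "ann", "ant", "ane", "ana", "anb", "anz", "ans", "another"]
print(search("an?", words))
print(search("an=c", words))
print(search("an=v", words))
print(search("=c=v=v=c* ?o?", ["could not", "waiting for", "heard you", "and so"]))
```

Matching with `fullmatch` means that a query has to cover the entire word or phrase, which is consistent with "an?" returning only three-letter types in the example above.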

- Filtering options

Searches can be filtered according to selected text, reader and author attributes. At the current stage of development, the corpus interface includes the 5 options listed below, but new categories may be added in the future.

- Context

As described in Section 3, the text of the novels has been divided into syntactically and prosodically independent fragments, which, in many cases, are "orthographic sentences". In dialogues, however, the narrator's comments that interrupt a character's utterance are classified as separate units. With this type of tokenization, it was possible to write a script which automatically classifies the text units as either "narration" or "dialogue". The script makes use of numerous punctuation conventions used in dialogues.

In a validation test involving 500 text units taken randomly from the corpus database, only 9 were classified incorrectly. On the basis of this result, the predicted proportion of cases which are misclassified in terms of context is 1.8% (95% CI: 0.88% - 3.51%).
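The source does not name the method used to compute this interval. The figures appear consistent with a Wilson score interval with continuity correction, so the sketch below uses that method as an assumption. The function name `wilson_cc_interval` is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def wilson_cc_interval(errors, n, confidence=0.95):
    """Wilson score interval with continuity correction for a proportion."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    p = errors / n
    q = 1 - p
    denominator = 2 * (n + z**2)
    lower = (2 * n * p + z**2 - 1
             - z * sqrt(z**2 - 2 - 1 / n + 4 * p * (n * q + 1))) / denominator
    upper = (2 * n * p + z**2 + 1
             + z * sqrt(z**2 + 2 - 1 / n + 4 * p * (n * q - 1))) / denominator
    # Clamp to the valid range of a proportion.
    return max(0.0, lower), min(1.0, upper)


# 9 misclassified text units out of 500, as in the validation test above.
lower, upper = wilson_cc_interval(9, 500)
print(f"error rate: {9 / 500:.1%}")
print(f"95% CI: {lower:.2%} - {upper:.2%}")
```

Under this assumption, the computed bounds round to the values reported above.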

- Function

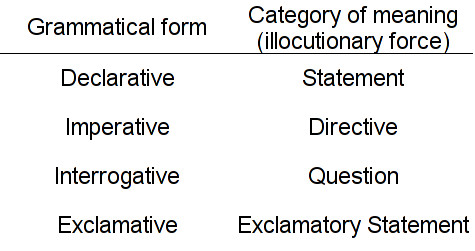

The function variable refers to the pragmatic categories of meaning as proposed by Huddlestone (1988). In Speech Act Theory (Austin, 1962) they are associated with the notion of "illocutionary force".In direct speech acts, they are prototypically linked to grammatical forms in the following manner:

The function distinction refers to the categories of meaning rather than the grammatical forms for two related reasons:

1. The automatic classification is based on punctuation conventions applied in the English language; these reflect the intended meaning rather than the grammatical form. Text units ending with a question mark were classified as questions regardless of whether the speech act was direct (the grammatical form was "interrogative"), or indirect (the grammatical form was "declarative", "imperative" or "exclamative"). The question mark normally signals that the expression is meant to be a question, regardless of the grammatical form. In spoken language, such information is usually conveyed by applying the intended intonational patterns. A similar strategy was used for exclamatory statements marked by the exclamation mark. All other text units were classified as "statement/directive". Unfortunately, there was no reliable way to discriminate between the two categories with the methodology applied.

2. The categories of meaning tend to affect prosodic aspects of voice more than grammatical form (as implied above, a text unit with the question mark at the end is likely to be read using any of the intonation patterns typical for questions, even if its grammatical form is declarative).
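The punctuation-based rule described in point 1 can be sketched in a few lines of Python. This is a simplified illustration, not the script used to build the corpus. The function name `classify_function` and the decision to ignore closing quotation marks are assumptions.

```python
def classify_function(text_unit):
    """Assign a pragmatic function label based on the final punctuation mark."""
    # Assumption: closing quotation marks and trailing whitespace are ignored,
    # so that a quoted unit is judged by the punctuation mark inside the quotes.
    core = text_unit.rstrip(" \t\n'\"\u2019\u201d")
    if core.endswith("?"):
        return "question"
    if core.endswith("!"):
        return "exclamative statement"
    # Statements and directives cannot be told apart by punctuation alone.
    return "statement/directive"


for unit in ["'and let me see you.'", "Is that you?", "'What a day!'"]:
    print(unit, "->", classify_function(unit))
```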

- Reader's gender, reader's dialect, author's gender

The gender of each reader was established by auditory assessment (by the creator of the corpus). The same method was applied when establishing the reader's dialect, in which case four broad categories were used:

1. Great Britain – for any dialect spoken in the British Isles. Most of the readers in this category use Received Pronunciation, but some exhibit characteristics of regional varieties of British English.

2. North America – for any dialect spoken in the United States of America and in Canada.

3. Australia/New Zealand – as the name suggests, for any dialects used in Australia or New Zealand.

4. Other/non-native – other readers were put into this category. Most of them are not native speakers of English.

Even using such broad categories, the dialect classification was demanding and the results may not be fully accurate. This is true especially for the distinction between British English and Australian English. For this reason, the dialect distinctions should be used with caution at this stage of the development of the corpus.

The classification of the author's gender was a straightforward task.

- Corpus statistics

- Text and audio statistics

The corpus is based on 104 novels written mostly in the nineteenth and early twentieth centuries. They contain 10,407,032 word tokens and were divided into 673,610 text units.

The corresponding audio recordings last over 1,104 hours. The average duration of one audiobook is over 10 hours.

For more details on both the novels and the audio recordings click HERE.

- Reader statistics

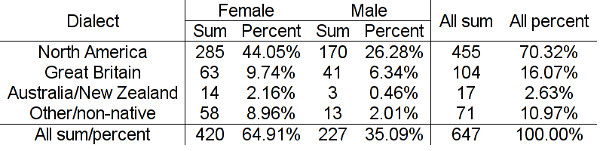

As illustrated in the table above, the audiobooks were read by 647 different readers. The majority of them (455) use one of the varieties of English spoken in the United States of America or Canada. Other dialects are much less common.

In terms of gender, there are more female readers (420) than male readers (226).

- Browser compatibility

The corpus makes use of the front-end web technology called "grid". The feature has been supported by all major browsers since 2017, but not by older versions. Therefore, use the latest version of Chrome, Firefox, Opera or Edge to avoid display problems.

- Licence

The corpus uses text and audio materials which are in the public domain. They may be used for research and many other purposes. For details visit "https://librivox.org" (for audio recordings) and "https://www.gutenberg.org" (for texts).